Large Language Model

We will look at LLMs from a Rust perspective.

ref: https://twitter.com/_aigeek/status/1717046220714308026/photo/1

Raw

Leaderboard

- https://llm.extractum.io/static/llm-leaderboards/

Models

- Recommended: Mistral-7B

- Long context: MistralLite

- With function calling: OpenHermes 2.5 Mistral 7B

- Coder: deepseek-ai/deepseek-coder-7b-instruct-v1.5

MoE

- Recommended: Mixtral-8x7B-Instruct-v0.1

Small Models

Multi-lang (Asia/Thai)

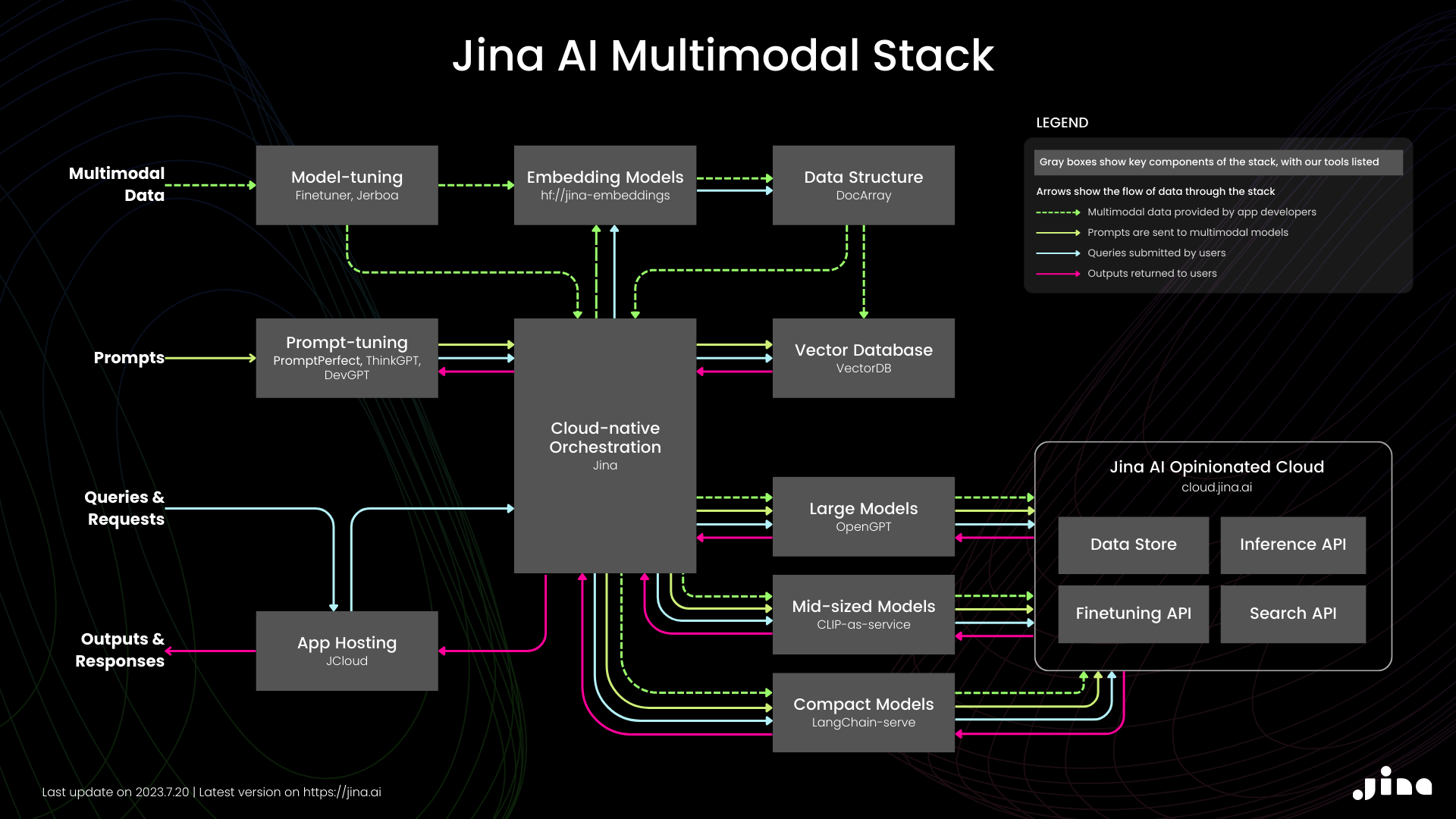

Embedding

- Sentence embeddings (Jina AI): jina-embeddings-v2-base-en

- Text Embeddings Inference
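Embedding models such as the one listed above map text to fixed-length vectors, and texts are usually compared by the cosine similarity of those vectors. The following is a minimal sketch of that comparison in Rust. It assumes the vectors have already been produced by some embedding model; the function name `cosine_similarity` is made up for this example and is not part of any listed project.

```rust
/// Cosine similarity between two equal-length vectors.
/// Returns `None` if the lengths differ, the input is empty,
/// or either vector has zero norm (similarity is undefined then).
pub fn cosine_similarity(a: &[f32], b: &[f32]) -> Option<f32> {
    if a.len() != b.len() || a.is_empty() {
        return None;
    }
    let dot: f32 = a.iter().zip(b).map(|(x, y)| x * y).sum();
    let norm_a: f32 = a.iter().map(|x| x * x).sum::<f32>().sqrt();
    let norm_b: f32 = b.iter().map(|x| x * x).sum::<f32>().sqrt();
    if norm_a == 0.0 || norm_b == 0.0 {
        return None;
    }
    Some(dot / (norm_a * norm_b))
}
```

Identical directions score close to 1.0 and orthogonal ones close to 0.0; returning `Option` keeps the undefined cases explicit instead of producing NaN.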

Dataset

Rust

Coding

- For coding-related tasks, see: Replit Code V-1.5 3B

- Runner-up: Tabby

- Runner-up: CodeGPT

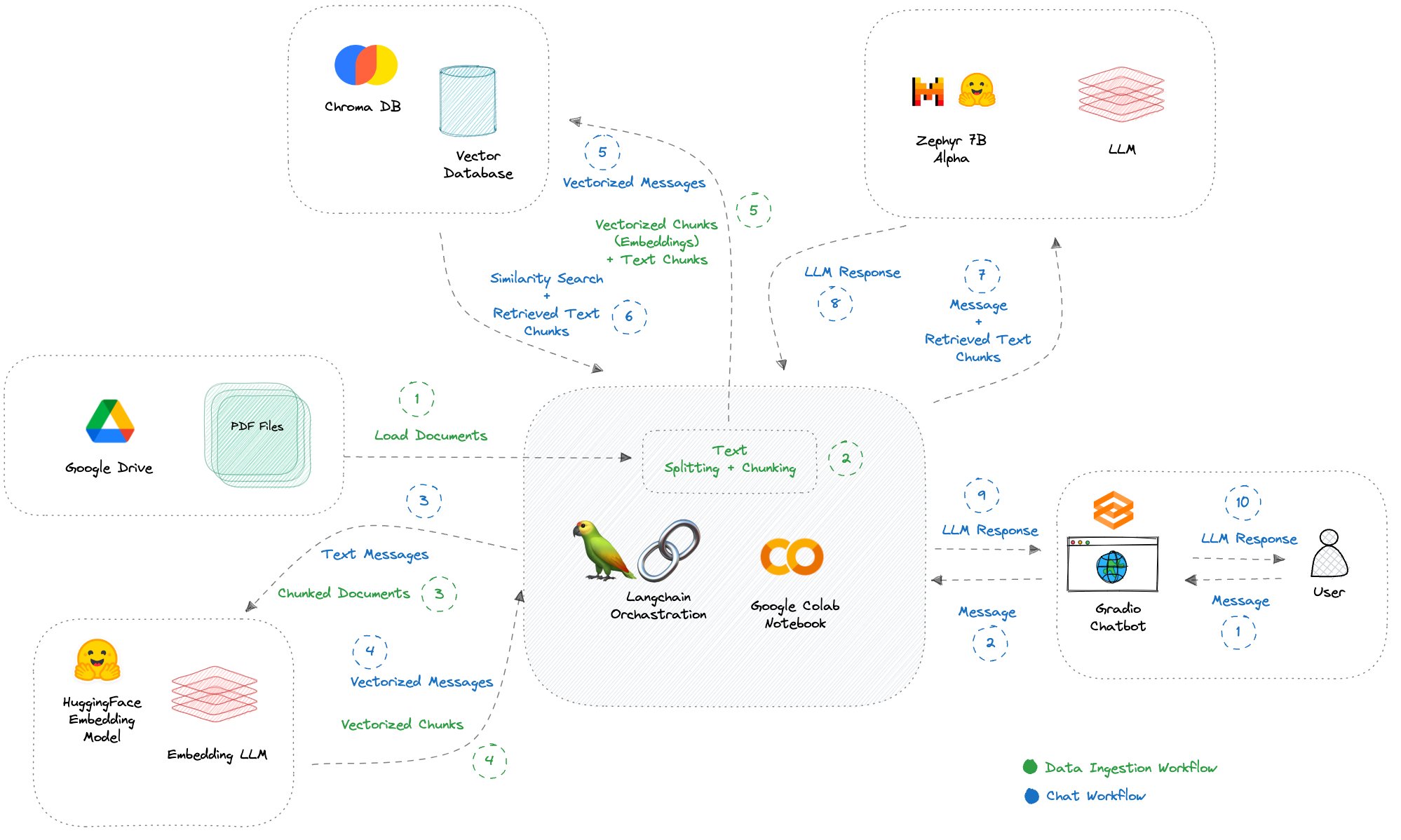

RAG

- Popular: LangChain

- Runner-up: LlamaIndex

- Rust: llm-chain

- Rust/Wasm: LlamaEdge
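The core of RAG is small enough to sketch without any of the frameworks above: score stored text chunks against the query embedding, keep the best few, and place them in the prompt ahead of the question. The sketch below is only an illustration under stated assumptions. The `Chunk` type, `retrieve`, and `build_prompt` are hypothetical names, the embeddings are assumed to be precomputed and unit-length, and none of this is the API of LangChain, LlamaIndex, llm-chain, or LlamaEdge.

```rust
/// A text chunk with a precomputed embedding (hypothetical type for this sketch).
pub struct Chunk {
    pub text: String,
    pub embedding: Vec<f32>,
}

/// Dot product; equals cosine similarity when both vectors are unit-length.
fn dot(a: &[f32], b: &[f32]) -> f32 {
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

/// Return the `k` chunks most similar to the query embedding, best first.
pub fn retrieve<'a>(chunks: &'a [Chunk], query: &[f32], k: usize) -> Vec<&'a Chunk> {
    let mut scored: Vec<(f32, &Chunk)> = chunks
        .iter()
        .map(|c| (dot(&c.embedding, query), c))
        .collect();
    // Sort by descending score; total_cmp gives a total order over f32.
    scored.sort_by(|a, b| b.0.total_cmp(&a.0));
    scored.into_iter().take(k).map(|(_, c)| c).collect()
}

/// Build the augmented prompt: retrieved context first, then the question.
pub fn build_prompt(context: &[&Chunk], question: &str) -> String {
    let mut prompt = String::from("Answer using only the context below.\n\nContext:\n");
    for c in context {
        prompt.push_str("- ");
        prompt.push_str(&c.text);
        prompt.push('\n');
    }
    prompt.push_str("\nQuestion: ");
    prompt.push_str(question);
    prompt
}
```

The string returned by `build_prompt` would then be sent to whichever model is being served. A real system would typically replace the linear scan with a vector index.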

Serve

- Rust/Wasm: LlamaEdge

- huggingface/text-generation-inference

- LoRAX: multi-LoRA inference server, based on TGI 👆.

- https://github.com/huggingface/llm-ls

Chat

- Rust/Wasm: LlamaEdge

- HuggingChat

Good read

- https://docs.mistral.ai/guides/basic-RAG/

- https://mlabonne.github.io/blog/

Overview

graph LR;

A("🐍 llama") --"4-bit"--> B("🐇 llama.cpp")

B --port ggml--> C("🦀 llm")

A --"16,32-bit"--> CC("🦀 RLLaMA")

A --Apple Silicon GPU--> AA("🐍 LLaMA_MPS")

C --"napi-rs"--> I("🐥 llama-node")

E --"fine-tuning to obey instructions"--> D("🐇 alpaca.cpp")

E --instruction-following--> H("🐍 codealpaca")

A --instruction-following--> E("🐍 alpaca") --LoRA--> F("🐍 alpaca-lora")

B --BLOOM-like--> BB("🐇 bloomz.cpp")

BB --LoRA--> DDDD("🐍 BLOOM-LoRA")

D --"fine-tunes the GPT-J 6B"--> DD("🐍 Dolly")

D --"instruction-tuned Flan-T5"--> DDD("🐍 Flan-Alpaca")

D --Alpaca_data_cleaned.json--> DDDD

E --RNN--> EE("🐍 RWKV-LM")

EE("🐍 RWKV-LM") --port--> EEE("🦀 smolrsrwkv")

H --finetuned--> EE

EE --ggml--> EEEE("🐇 rwkv.cpp")

A --"GPT-3.5-Turbo/7B"--> FF("🐍 gpt4all-lora")

A --ShareGPT/13B--> AAAAA("🐍 vicuna")

A --Dialogue fine-tuned--> AAAAAA("🐍 Koala")

A --meta--> AAAAAAA("🐍 llama-2")

- 🐍 llama: Open and Efficient Foundation Language Models.

- 🐍 LLaMA_MPS: Run LLaMA (and Stanford-Alpaca) inference on Apple Silicon GPUs.

- 🐇 llama.cpp: Inference of LLaMA model in pure C/C++.

- 🐇 alpaca.cpp: Combines the LLaMA foundation model with an open reproduction of Stanford Alpaca, a fine-tuning of the base model to obey instructions (akin to the RLHF used to train ChatGPT), and a set of modifications to llama.cpp to add a chat interface.

- 🦀 llm: Do the LLaMA thing, but now in Rust 🦀🚀🦙

- 🐍 alpaca: Stanford Alpaca: An Instruction-following LLaMA Model

- 🐍 codealpaca: An Instruction-following LLaMA Model trained on code generation instructions.

- 🐍 alpaca-lora: Low-Rank LLaMA Instruct-Tuning (train: 1 hr on an RTX 4090).

- 🐥 llama-node: Node.js client library for LLaMA LLMs, built on top of llama-rs, llama.cpp and rwkv.cpp. It uses napi-rs for communication between Node.js and native code.

- 🦀 RLLaMA: Rust+OpenCL+AVX2 implementation of LLaMA inference code.

- 🐍 Dolly: This fine-tunes the GPT-J 6B model on the Alpaca dataset using a Databricks notebook.

- 🐍 Flan-Alpaca: Instruction Tuning from Humans and Machines.

- 🐇 bloomz.cpp: Inference of HuggingFace's BLOOM-like models in pure C/C++ built on top of the amazing llama.cpp.

- 🐍 BLOOM-LoRA: Low-Rank LLaMA Instruct-Tuning.

- 🐍 RWKV-LM: RWKV is an RNN with transformer-level LLM performance. It can be directly trained like a GPT (parallelizable). So it's combining the best of RNN and transformer - great performance, fast inference, saves VRAM, fast training, "infinite" ctx_len, and free sentence embedding.

- 🦀 smolrsrwkv: A very basic example of the RWKV approach to language models written in Rust by someone that knows basically nothing about math or neural networks.

- 🐍 gpt4all-lora: A chatbot trained on a massive collection of clean assistant data including code, stories and dialogue.

- 🐇 rwkv.cpp: A port of BlinkDL/RWKV-LM to ggerganov/ggml. The end goal is to allow 4-bit quantized inference on CPU (WIP).

- 🐍 vicuna: An Open-Source Chatbot Impressing GPT-4 with 90% ChatGPT Quality.
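Several of the projects above mention 4-bit inference. The idea can be sketched as block-wise quantization: a block of weights shares one floating-point scale, and each weight is stored as a 4-bit integer, two per byte. The code below is a simplified illustration only. The `BlockQ4` layout and the function names are assumptions made for this sketch and do not reproduce the actual ggml, llama.cpp, or rwkv.cpp formats.

```rust
/// One quantized block: a shared scale plus packed 4-bit values (two per byte).
/// Hypothetical layout for illustration, not a real on-disk format.
pub struct BlockQ4 {
    pub scale: f32,
    pub packed: Vec<u8>,
    pub len: usize,
}

/// Quantize a block of weights to signed 4-bit integers in [-7, 7].
pub fn quantize_q4(values: &[f32]) -> BlockQ4 {
    // The largest magnitude maps to the edge of the 4-bit range.
    let max_abs = values.iter().fold(0.0f32, |m, v| m.max(v.abs()));
    let scale = if max_abs == 0.0 { 1.0 } else { max_abs / 7.0 };
    let quant = |v: f32| -> u8 {
        let q = (v / scale).round().clamp(-7.0, 7.0) as i8;
        (q + 8) as u8 // shift to 1..=15 so it fits in an unsigned nibble
    };
    let packed: Vec<u8> = values
        .chunks(2)
        .map(|pair| {
            let lo = quant(pair[0]);
            // An odd-length block pads the last high nibble with 8, which encodes 0.0.
            let hi = if pair.len() > 1 { quant(pair[1]) } else { 8 };
            lo | (hi << 4)
        })
        .collect();
    BlockQ4 { scale, packed, len: values.len() }
}

/// Recover approximate weights from a quantized block.
pub fn dequantize_q4(block: &BlockQ4) -> Vec<f32> {
    let mut out = Vec::with_capacity(block.len);
    for &byte in &block.packed {
        for nibble in [byte & 0x0F, byte >> 4] {
            if out.len() < block.len {
                out.push((nibble as i8 - 8) as f32 * block.scale);
            }
        }
    }
    out
}
```

Each weight costs 4 bits plus its share of the block's scale, and the round-trip error per weight is at most half the scale. That trade of precision for memory is what makes CPU inference of large models practical.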

Tools

graph TD;

AAAA("ChatGPT")

AAAA --> AAA

AAAA ---> J("🦀 llm-chain")

AAAA --> I

AAA --> A

A("🐍 langchain")

A --port--> AA("🐥 langchainjs")

AA --> B("🐥 langchain-alpaca")

D("🐇 alpaca.cpp") --> B

E-..-D

E-..-DD("🐍 petals")

E("🐇 llama.cpp") --ggml/13B--> H

F("🐇 whisper.cpp") --whisper-small--> H

H("🐇 talk")

I("🐍 chatgpt-retrieval-plugin") --> II("🐍 llama-retrieval-plugin")

- 🐍 langchain: Building applications with LLMs through composability.

- 🐥 langchainjs: langchain in js.

- 🐥 langchain-alpaca: Run alpaca LLM fully locally in langchain.

- 🐇 whisper.cpp: High-performance inference of OpenAI's Whisper automatic speech recognition (ASR) model.

- 🐍 whisper-small: Whisper is a pre-trained model for automatic speech recognition (ASR) and speech translation. Trained on 680k hours of labelled data, Whisper models demonstrate a strong ability to generalise to many datasets and domains without the need for fine-tuning.

- 🐇 talk: Talk with an Artificial Intelligence in your terminal.

- 🐍 chatgpt-retrieval-plugin: The ChatGPT Retrieval Plugin lets you easily search and find personal or work documents by asking questions in everyday language.

- 🐍 llama-retrieval-plugin: LLaMa retrieval plugin script using OpenAI's retrieval plugin

- 🦀 llm-chain: Prompt templates and multi-step prompt chains, for tasks such as summarizing lengthy texts or performing advanced data processing.

- 🐍 petals: Run 100B+ language models at home, BitTorrent-style. Fine-tuning and inference up to 10x faster than offloading.
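The "prompt templates and chains" idea behind tools like langchain and llm-chain can be illustrated in a few lines: fill a template with some text, send it to a model, and feed the answer into the next template. This is a rough sketch, not the API of either library. The `{{text}}` placeholder syntax, `render`, and `run_chain` are invented for this example, and the `model` parameter stands in for any LLM call.

```rust
/// Fill the `{{text}}` placeholder in a template.
/// The placeholder syntax is an assumption of this sketch.
pub fn render(template: &str, text: &str) -> String {
    template.replace("{{text}}", text)
}

/// Run the templates in order, feeding each step's output into the next one.
/// `model` stands in for any LLM call (local or remote).
pub fn run_chain<F>(templates: &[&str], input: &str, model: F) -> String
where
    F: Fn(&str) -> String,
{
    let mut current = input.to_string();
    for template in templates {
        let prompt = render(template, &current);
        current = model(&prompt);
    }
    current
}
```

For example, `run_chain(&["Summarize: {{text}}", "Translate to Thai: {{text}}"], article, call_model)` would summarize first and then translate the summary, where `article` and `call_model` are placeholders for your own input and model binding.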

ETC

Refer to: https://replicate.com/blog/llama-roundup

- Running LLaMA on a Raspberry Pi by Artem Andreenko.

- Running LLaMA on a Pixel 5 by Georgi Gerganov.

- Run LLaMA and Alpaca with a one-liner: npx dalai llama

- Train and run Stanford Alpaca on your own machine, from Replicate.

- Fine-tune LLaMA to speak like Homer Simpson, from Replicate.

- Llamero – A GUI application to easily try out Facebook's LLaMA models by Marcel Pociot.